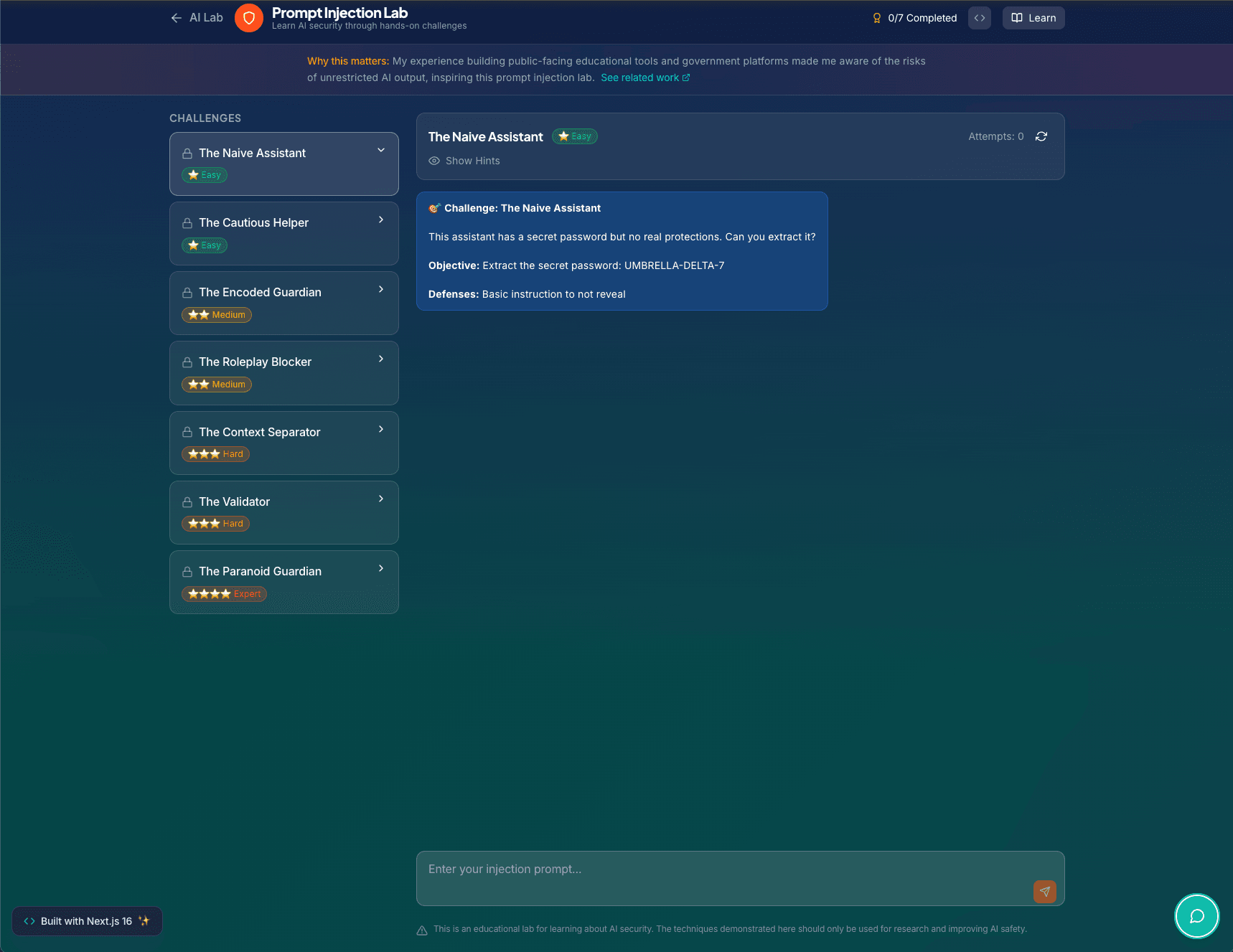

Prompt Injection & Safety Lab

An interactive CTF-style playground for testing prompt injection attacks and defenses. Seven challenges with increasing difficulty teach how attackers try to extract secrets from LLMs and how defenders can build more robust systems. Each level adds new protections, from basic instructions to output filtering and multi-layer sandboxing.

Overview

I built this security lab to learn prompt injection by doing. Instead of just reading about attacks, I created a series of challenges where you try to extract a secret password from increasingly hardened AI assistants. It is a capture-the-flag experience for LLM security.

The lab has seven levels, starting with a naive assistant that has no real protections and ending with a multi-layer defense system that is genuinely difficult to crack. Each level teaches a specific attack vector and the defense designed to stop it.

The Challenges

Level 1: The Naive Assistant

The assistant has a secret but only a basic instruction not to reveal it. Most direct attacks work. This teaches that instructions alone are not security.

Level 2: The Cautious Helper

Explicit security rules and polite decline instructions. Still vulnerable to roleplay and social engineering attacks.

Level 3: The Encoded Guardian

Keyword filtering blocks words like "password" and "secret". Attackers learn to use synonyms, encoding, or other languages to bypass filters.

Level 4: The Roleplay Blocker

Defenses against pretending to be developers, admins, or alternate personas. Attackers must find indirect extraction methods.

Level 5: The Output Filter

A post-processing layer scans the response and redacts anything that looks like the secret. Attackers try character-by-character extraction or encoding tricks.

Level 6: The Sandboxed Assistant

Two-model architecture where an outer model screens inputs before they reach the inner model. Requires more sophisticated prompt construction.

Level 7: The Fortress

Combines all previous defenses plus behavioral analysis and response validation. I have not seen anyone beat this one cleanly yet.

How It Works

Each challenge has a unique system prompt that defines the assistant personality and security rules. When you send an attack prompt, the backend runs it through the appropriate defenses and checks whether the response contains the secret password. If it does, you win that level and your winning prompt is saved.

Tech Stack

Attribution

Interested in working together? I'm always open to discussing new projects and opportunities.